In a broad sense, the Internet of Things (IoT) is a network of physical objects that interact with each other across three levels:

Lower Level: Sensors that continuously measure quantitative indicators such as temperature, pressure, and level in people's surroundings, in devices, and in the wider environment.

Middle Level: Transceivers and hubs that collect, store, and transmit data from the lower to the upper level.

Upper Level: Software that interprets the data gathered from the lower level and makes decisions that impact our lives.

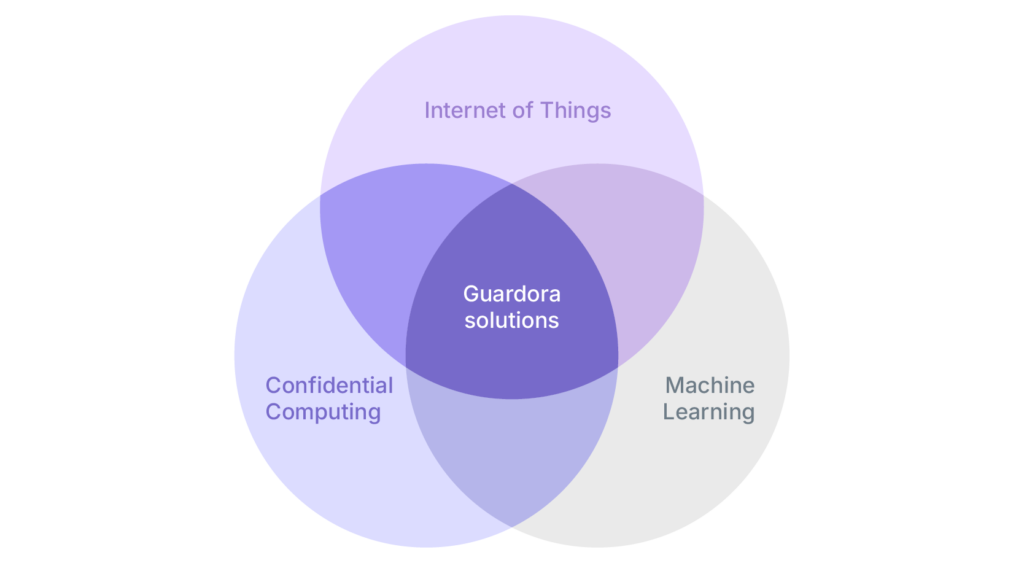
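The division of labor between the three levels can be illustrated with a toy pipeline. This is a minimal sketch, not a real IoT stack: the `Reading`, `Hub`, and `decide` names and the temperature threshold are hypothetical, and a real middle level would transmit data over a network rather than return a Python list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Reading:
    """Lower level: a single measurement produced by a sensor."""
    sensor_id: str
    metric: str   # e.g. "temperature", "pressure"
    value: float


@dataclass
class Hub:
    """Middle level: collects and stores readings, then forwards them upward."""
    buffer: List[Reading] = field(default_factory=list)

    def collect(self, reading: Reading) -> None:
        self.buffer.append(reading)

    def transmit(self) -> List[Reading]:
        # Hand the stored batch to the upper level and clear the local store.
        batch, self.buffer = self.buffer, []
        return batch


def decide(batch: List[Reading], limit: float = 90.0) -> List[str]:
    """Upper level: interpret readings and flag sensors that need attention.

    The temperature limit is a hypothetical threshold for illustration only.
    """
    return [r.sensor_id for r in batch if r.metric == "temperature" and r.value > limit]


hub = Hub()
hub.collect(Reading("s1", "temperature", 72.5))
hub.collect(Reading("s2", "temperature", 95.1))
hub.collect(Reading("s3", "pressure", 101.3))
alerts = decide(hub.transmit())
print(alerts)  # ['s2']
```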

This article focuses on Guardora's solutions for protecting IoT data used in machine learning (ML).

Sensitive Data in the Internet of Things

Broadly speaking, sensitive data includes any information that can be used for unauthorized actions against individuals and companies.

In consumer IoT, examples include geolocation data, health metrics from wearable devices, biometric information, and the technical condition of personal vehicles and other gadgets.

In the Industrial Internet of Things (IIoT), sensitive data encompasses any information from devices, assets, and fields that is deemed confidential.

Below are some cases in which we have encountered all three domains together: IoT, ML, and confidential computing.

- Predictive analytics models for industrial equipment condition. Streaming sensor data makes it possible to estimate how soon a machine will fail. The goal is to prevent the failure or to advise the operator-technician on what needs to be done to improve repair and maintenance.

- Predictive risk assessment models for drivers of various vehicles. These models assess the risks associated with drivers of all kinds of vehicles, from scooters to cars, for insurance scoring. The primary dataset is collected through a mobile application, which determines the type of mobility from real-time speed, accelerometer and gyroscope readings, and route geolocation. The data includes GPS coordinates, speed, direction of movement, three-axis accelerometer readings, details of the user's movements, and any accidents the user has been involved in. Other data sources include companies that sell car alarms with tracking functions, as well as some corporate clients. Car alarm vendors often split tracks into short segments, because they believe that an individual's stop points could eventually identify that person by revealing where they live, work, and so on. Even without knowing the person's name, there is a good chance of identifying the user indirectly, which creates privacy risks. This segmentation, however, makes the data very cumbersome to use.

- Big automotive data. The value of big automotive data goes beyond assessing traffic accident risk to identifying commercially useful patterns: where people drive, stop, shop, and refuel. These patterns make it possible to form user cohorts of interest to third-party companies such as retailers, oil companies, and entertainment providers. Such datasets are collected by major independent companies and processed using secure multi-party computation (SMPC) protocols or federated learning.

- Environmental monitoring. On the one hand, governments, scientists, and the public are interested in better ML models. On the other hand, companies with a significant impact on emissions are reluctant to share their data openly for fear of leaks, sanctions, and reputational damage.
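The track segmentation described in the driver risk case can be sketched as follows. This is a minimal illustration, not any vendor's actual method: the `segment_track` function, its `max_points` parameter, and the point format `(timestamp_s, lat, lon)` are hypothetical. Each piece receives a fresh random ID and the pieces are shuffled, so neither the IDs nor the output order link them back to a single trip.

```python
import secrets
from typing import Dict, List, Tuple

# Hypothetical point format: (timestamp in seconds, latitude, longitude)
Point = Tuple[float, float, float]


def segment_track(track: List[Point], max_points: int = 3) -> List[Dict]:
    """Split one trip into short pieces, each tagged with an unlinkable random ID."""
    if max_points < 1:
        raise ValueError("max_points must be positive")
    segments = [
        {"segment_id": secrets.token_hex(8), "points": track[i:i + max_points]}
        for i in range(0, len(track), max_points)
    ]
    # Shuffle so that the output order does not reveal the sequence of pieces.
    secrets.SystemRandom().shuffle(segments)
    return segments


track = [(t * 10.0, 55.750 + t * 0.001, 37.610 + t * 0.002) for t in range(7)]
pieces = segment_track(track, max_points=3)
print(sorted(len(p["points"]) for p in pieces))  # [1, 3, 3]
```

Segmentation alone does not guarantee anonymity: timestamps and adjacent coordinates can still let a determined analyst stitch pieces back together. The sketch also shows why such data is cumbersome: any model that needs whole trips has to work without the links that were deliberately removed.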
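Secure multi-party computation, mentioned in the automotive case, also fits the environmental monitoring scenario: several companies could learn their combined emissions without disclosing individual figures. Below is a minimal sketch of one SMPC building block, additive secret sharing. It simulates all parties in one process and assumes honest-but-curious participants; the function names, the modulus, and the emission figures are hypothetical, and a real deployment would also need secure channels between the parties.

```python
import secrets
from typing import List

MODULUS = 2**61 - 1  # a large prime; all arithmetic is done modulo this value


def share(value: int, n_parties: int) -> List[int]:
    """Split a private integer into n additive shares that sum to it mod MODULUS.

    Any n-1 of the shares are uniformly random and reveal nothing about the value.
    """
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: List[int]) -> int:
    """Compute the total of all inputs without any party seeing another's input."""
    n = len(private_values)
    # Row i holds the shares produced by party i; party j receives column j.
    all_shares = [share(v, n) for v in private_values]
    # Each party adds up only the shares it received (one from every party).
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals the total, not the individual inputs.
    return sum(partial_sums) % MODULUS


emissions = [1200, 850, 430]  # hypothetical annual figures for three companies
print(secure_sum(emissions))  # 2480
```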

This topic and market are vast, and many open questions remain, for example:

- Is it advisable to combine data protection and preprocessing using ML methods at the lower and middle levels? Or is it sufficient to encrypt data from the lower level for transmission to the upper level, where the full processing cycle can be performed?

- For which IoT tasks is data anonymization sufficient, and in which cases must the confidentiality of every byte of transmitted traffic be ensured? How much does anonymizing part of the data degrade the quality of the ML solutions built on it?

- Is it possible to standardize approaches to data confidentiality so that the same software works regardless of who manufactured the sensors, transceivers, and data storage? Or is such interoperability impossible to organize without specialized integrators?

- How can we assess the security of using edge computing to reduce traffic and model response time? Can we even speak of data security in this case?

- Is it possible to combine non-confidential data transmitted in the open with protected confidential data when building ML solutions? Is the approach viable where only part of the data stream is protected, making it impossible to reconstruct the full picture?
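One common answer to the anonymization question is to pseudonymize identifiers and coarsen location before data leaves the device or hub. The sketch below is an illustration under assumptions, not Guardora's method: the record fields, the `anonymize` function, and the key handling are hypothetical. It replaces the device ID with a keyed HMAC-SHA256 pseudonym, rounds the coordinates, and drops the owner's name.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical key; in practice it would be managed and rotated by the data owner.
SECRET_KEY = b"replace-with-a-managed-secret"


def anonymize(record: Dict, decimals: int = 2) -> Dict:
    """Return a copy of a telemetry record with the identifier pseudonymized
    and the coordinates coarsened. Field names are hypothetical."""
    pseudonym = hmac.new(
        SECRET_KEY, record["device_id"].encode(), hashlib.sha256
    ).hexdigest()[:16]
    return {
        "device": pseudonym,                    # stable, but not reversible without the key
        "lat": round(record["lat"], decimals),  # 2 decimals is about 1.1 km of latitude
        "lon": round(record["lon"], decimals),
        "speed_kmh": record["speed_kmh"],       # non-identifying feature kept for the model
        # "owner_name" and other direct identifiers are dropped entirely
    }


raw = {"device_id": "car-0042", "owner_name": "Jane Doe",
       "lat": 55.751244, "lon": 37.618423, "speed_kmh": 47.0}
print(anonymize(raw))
```

The `decimals` parameter makes the trade-off concrete: two decimal places of latitude correspond to roughly 1.1 km, which hides an exact address but also removes detail that a route-level model might need. Pseudonymized records can still be re-identified from behavioral patterns such as recurring stop points, so this is not a guarantee of anonymity.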

If you are interested in experiments on this topic, join our community on Discord to participate in discussions and pilot projects.