Federated Learning (FL) has already become an essential tool for building secure systems capable of processing data locally on devices. However, one of FL's most remarkable features is its potential to enable cross-platform solutions. This article explores how FL adapts to heterogeneous devices, the technologies that ensure cross-platform compatibility, and how this interoperability opens new horizons for the further development of FL.

Orchestrating the Transition: From Centralized Servers to Local Processing

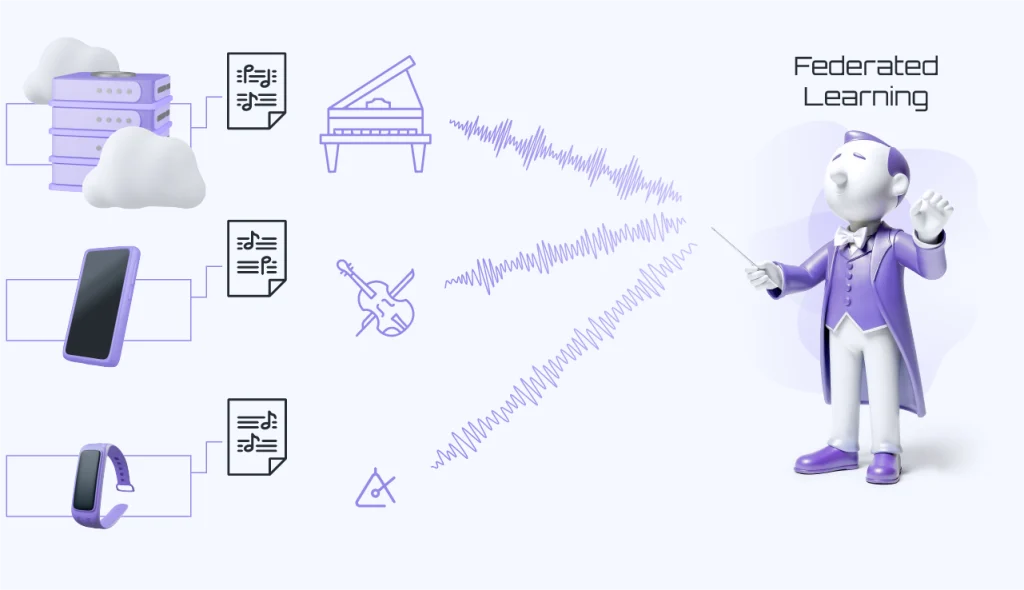

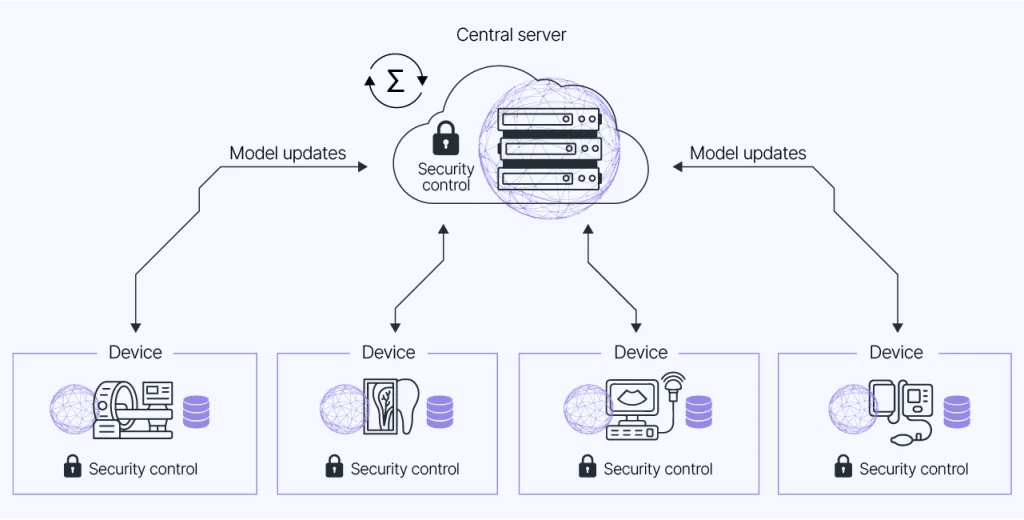

Traditional machine learning methods involve collecting data from user devices and processing it on centralized servers. This approach increases the risk of data breaches. Federated Learning offers an alternative: data remains on devices, and models are trained locally. Only model weight updates are sent to the server, where they are aggregated into a unified model. This approach preserves data privacy while reducing the amount of transmitted information, which is particularly important for devices with limited bandwidth.
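The aggregation step described above can be sketched in a few lines. This is a minimal illustration, assuming each device sends a flat list of weights and that the server weights each contribution by the device's local sample count (the scheme commonly known as federated averaging). The function name `federated_average` and the example numbers are hypothetical, not taken from any particular framework.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine client weight vectors into one global vector.

    Each client's contribution is proportional to its local sample count
    (an assumption of this sketch; other weighting schemes are possible).
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, w in enumerate(weights):
            global_weights[i] += w * n_samples / total
    return global_weights


# Three hypothetical devices with different amounts of local data.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 30, 60]
print(federated_average(updates, sizes))
```

Only the weight vectors and sample counts reach the server in this sketch; the raw training data never leaves the devices.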

A parallel can be drawn to orchestrating a symphony, where each musician plays their part without sharing their original sheet music with others, yet together they produce a harmonious performance.

FL is well suited to ecosystems of heterogeneous devices:

- IoT Devices: Sensors, cameras, smart speakers, and other devices with minimal computational resources.

- Smartphones: More powerful than IoT devices but constrained by battery capacity and bandwidth.

- Cloud Servers: High-performance systems with powerful processors.

This ecosystem resembles an orchestra, where each musician plays their unique instrument (from the bright chime of a triangle to the deep resonance of a grand piano), and the conductor (the central server) unifies their efforts to create a harmonious symphony. This "computational orchestra" allows devices with diverse capabilities to work together, striking a balance between performance and data privacy. However, achieving such harmony requires addressing complex technical challenges related to ensuring device compatibility and system resilience.

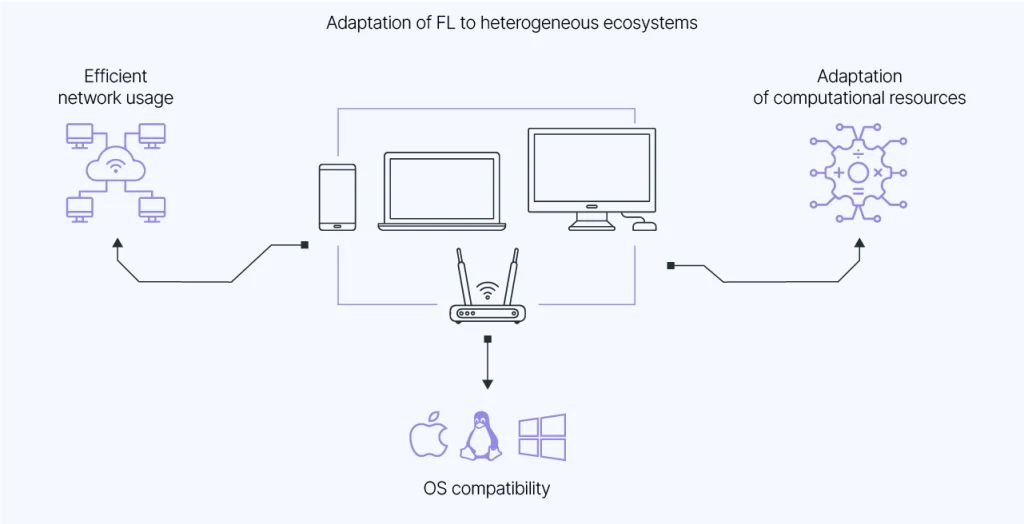

A Symphony of Compatibility: How FL Adapts to Heterogeneous Ecosystems

The variety of devices involved in Federated Learning (FL) creates integration challenges. Just as each instrument in an orchestra has its unique sound and tuning requirements, FL must adapt to devices that differ greatly in performance, architecture, and capabilities.

Heterogeneity in Computational Resources

Devices participating in FL range from 8-bit microcontrollers in IoT to cloud servers equipped with GPUs and NPUs. To ensure the "learning melody" remains harmonious, adaptive approaches are employed to accommodate the characteristics of each device. Here are some of them:

- Quantization: Similar to translating a complex musical score into simplified notation, this technique reduces the precision of numerical representations in models, lowering resource requirements without significant quality loss.

- Pruning: By removing "redundant" model parameters, this approach simplifies the model, akin to reducing the number of instruments in an orchestration for more streamlined performance.

- Federated Distillation: Instead of transmitting full model weights to the server, only predictions (soft labels) are sent. This minimizes data transmission and lightens the computational load on resource-constrained devices.

- Adaptive Aggregation: Rather than uniformly averaging updates, the server weights them based on device-specific factors such as local data volume, update quality, or computational capabilities. This results in a more balanced and accurate global model.

- Personalization: Allows the global FL model to be fine-tuned for the unique characteristics of each device and its local data.
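Two of the techniques listed above, quantization and pruning, are easy to illustrate on a plain list of weights. The sketch below assumes uniform 8-bit quantization and magnitude-based pruning. The helper names (`quantize`, `dequantize`, `prune_by_magnitude`) and the sample weights are hypothetical, and real deployments typically rely on a framework's own tooling.

```python
from typing import List, Sequence, Tuple


def quantize(values: Sequence[float], num_bits: int = 8) -> Tuple[List[int], float, float]:
    """Map floats to integer codes in [0, 2**num_bits - 1] on a uniform grid."""
    if not values:
        raise ValueError("values must not be empty")
    lo, hi = min(values), max(values)
    levels = (1 << num_bits) - 1
    # If all values are identical, any non-zero scale works.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize(codes: Sequence[int], scale: float, lo: float) -> List[float]:
    """Recover approximate floats from integer codes."""
    return [lo + c * scale for c in codes]


def prune_by_magnitude(values: Sequence[float], fraction: float) -> List[float]:
    """Zero out the given fraction of entries with the smallest absolute value."""
    k = int(len(values) * fraction)
    by_magnitude = sorted(range(len(values)), key=lambda i: abs(values[i]))
    smallest = set(by_magnitude[:k])
    return [0.0 if i in smallest else v for i, v in enumerate(values)]


weights = [0.5, -0.01, 0.3, 0.02, -0.8, 0.0]
codes, scale, lo = quantize(weights)
print(codes)                          # small integers instead of floats
print(dequantize(codes, scale, lo))   # close to the original weights
print(prune_by_magnitude(weights, 0.5))
```

With this uniform grid, the round-trip error of each weight is at most half of `scale`, which is the sense in which precision is traded for size.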

Heterogeneity of Operating Systems and Architectures

FL must also operate seamlessly across platforms with diverse operating systems (Android, iOS, Linux, RTOS) and processor architectures (ARM, x86), whose participants are much like musicians speaking different languages. For this reason, universal tools are developed to ensure mutual understanding among all participants:

- Universal Libraries: These abstract the differences between platforms, acting as translators that enable systems to communicate while preserving their unique features.

- Standardized Communication Protocols: Widely adopted protocols and frameworks such as MQTT and gRPC serve as a common language for interaction between diverse devices.

- Containerization: Similar to carrying an instrument in a protective case, containers package software together with its dependencies, isolating it from differences between host environments and ensuring compatibility across them.

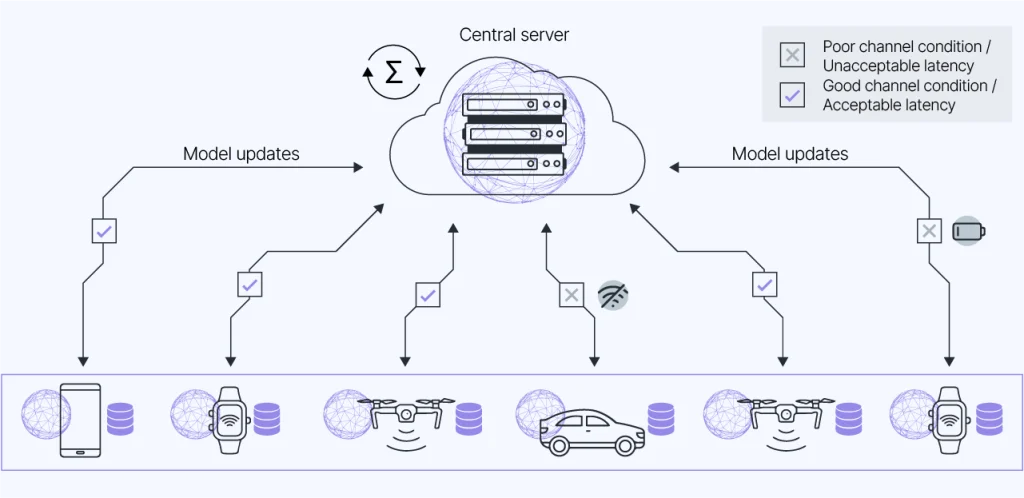

Limited Network Resources

Weak connections, high latency, and low bandwidth are common challenges for many IoT devices. This can be compared to an orchestra where some musicians play with less intensity, "lag behind," or even miss their parts entirely. To address these issues, FL employs several approaches:

- Gradient and Update Compression: Similar to sharing short notes instead of the full score, model updates are transmitted in a compressed format.

- Data Caching: Devices temporarily "store their parts" and transmit them when the connection stabilizes.

- Asynchronous Training: Devices work independently, ensuring the overall process isn't delayed by the lag of a single participant.
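As an illustration of update compression, the sketch below uses top-k sparsification: the device transmits only the k largest-magnitude entries of its update as (index, value) pairs, and the server fills the rest with zeros. This is one possible compression scheme among several, and the names `compress_top_k` and `decompress` are hypothetical.

```python
from typing import List, Sequence, Tuple


def compress_top_k(update: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Keep only the k entries with the largest absolute value."""
    k = max(0, min(k, len(update)))
    top = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    # Return pairs ordered by index so the payload is deterministic.
    return sorted((i, update[i]) for i in top)


def decompress(pairs: Sequence[Tuple[int, float]], length: int) -> List[float]:
    """Rebuild a dense update, treating untransmitted entries as zero."""
    dense = [0.0] * length
    for index, value in pairs:
        dense[index] = value
    return dense


update = [0.1, -2.0, 0.05, 1.5, -0.3]
payload = compress_top_k(update, k=2)
print(payload)                           # two (index, value) pairs instead of five floats
print(decompress(payload, len(update)))
```

The dropped entries are lost in this simple version; practical schemes often accumulate them locally and add them to the next round's update.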

The Polyphony of Challenges: FL Solutions Across Industries

Federated Learning (FL) is gradually becoming an indispensable tool for uniting numerous devices and platforms across various industries. Each industry resembles a unique orchestra: IoT is like a chamber ensemble, where resource-constrained devices play a synchronized melody; healthcare resembles a symphony orchestra with massive datasets and stringent regulations; telecommunications is akin to a jazz band, adapting to constant changes. Let’s take a closer look at how FL addresses the challenges in these diverse fields and creates harmony in their operations.

IoT: A Chamber Ensemble of Technologies

In the IoT sector, FL functions like a chamber ensemble, where devices with limited computational resources (smart sensors, thermostats, and cameras) perform their roles locally, sharing results with a central node. Imagine traffic lights learning from local traffic sensor data to optimize flow, while air quality sensors create pollution maps without transmitting sensitive data to the cloud. In smart homes, FL acts as a diligent tuner, allowing smart thermostats to adapt to residents' habits and maintain comfortable temperatures without sending personal preferences to centralized storage.

However, behind the scenes of this harmony, there are serious technical challenges that need to be addressed.

Key Challenges:

- Weak Devices: IoT devices, such as temperature sensors or smart plugs, are "musicians" with limited capabilities. Their computational power is modest and their energy is quickly depleted, especially when performing complex training tasks.

- Unstable Network: Frequent disruptions and low bandwidth can interfere with system performance.

- Data Privacy: Sensors may collect sensitive information, such as user behavior, requiring strict adherence to security standards.

To ensure this "chamber orchestra" plays in harmony, FL offers several solutions that account for the resource constraints and specific requirements of IoT:

- Lightweight Models and Simple Algorithms: FL employs compact models, akin to simplified scores, which require fewer resources to execute.

- Local Data Processing: Like a musician performing their part on-site, IoT devices process data locally, minimizing the amount of transmitted information and reducing network load.

- Differential Privacy: Calibrated noise is added to the data or to model updates, making it difficult to trace a contribution back to its source while preserving most of its utility.

- Asynchronous Training: If one musician is temporarily unavailable, the ensemble continues playing without waiting for them to return. Asynchronous training allows devices to update the model independently, reducing delays.

- Energy-Efficient Algorithms: Optimized training extends the battery life of devices, particularly those running on limited power sources.
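The differential privacy step mentioned above is often applied to model updates in two stages: the update is first clipped to a maximum norm, then Gaussian noise proportional to that norm is added. The sketch below shows only these mechanics. The function names and the `noise_multiplier` value are hypothetical, and the noise level is not calibrated here to any specific privacy budget.

```python
import math
import random
from typing import List, Sequence


def clip_update(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm == 0.0 or norm <= max_norm:
        return list(update)
    factor = max_norm / norm
    return [x * factor for x in update]


def privatize(update: Sequence[float], max_norm: float,
              noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip the update, then add Gaussian noise scaled to the clipping norm."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


rng = random.Random(42)  # fixed seed only to make the example repeatable
print(clip_update([3.0, 4.0], max_norm=1.0))
print(privatize([3.0, 4.0], max_norm=1.0, noise_multiplier=0.5, rng=rng))
```

Clipping bounds how much any single device can influence the global model, which is what allows the added noise to mask individual contributions.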

Healthcare: A Symphony Orchestra of Data

Healthcare, with its diverse range of devices and data, can be compared to a symphony orchestra, where each section—clinics, medical devices, and sensors—works in unison to achieve optimal outcomes. FL enables the integration of data without compromising patient privacy. For instance, patients with diabetes use glucometers and fitness trackers to monitor blood sugar levels and physical activity, and FL trains models that provide personalized recommendations based on this data.

Key Challenges:

- Heterogeneity of Medical Data: Medical devices use varying data formats (e.g., fitness trackers from different manufacturers or medical equipment adhering to different standards), making data integration challenging.

- Regulatory Constraints: Laws such as HIPAA and GDPR impose strict requirements on the processing and storage of medical data.

- Vulnerability of Local Devices: Wearable devices and medical scanners can be subject to attacks, posing risks to data security.

To ensure the orchestra works smoothly, modern methods of protection and standardization must be used:

- Converting data to a single format: Pre-processing data allows it to be standardized before being combined, similar to how each musician tunes their instrument before a concert.

- Reliable protection of data privacy (implementation of these methods in practice is not always easy and can affect performance):

  - Differential privacy (DP) adds "noise" to the data to make it difficult to identify.

  - Homomorphic encryption (HE) allows computations to be performed on encrypted data.

  - Secure multi-party computation (SMPC) enables collaboration without disclosing the original data.

- Strengthening device security: Regular firmware updates and activity monitoring help devices operate securely, preventing attacks.
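To give a flavor of how collaboration without disclosure can work, the sketch below uses pairwise additive masking, a simplified idea behind secure aggregation. Every pair of clients shares a random mask that one adds and the other subtracts, so each masked update looks random on its own, yet the masks cancel in the sum. It assumes updates are already encoded as non-negative integers whose true sum stays below `MODULUS`. The shared random generator stands in for secrets the clients would agree on pairwise, and all names are hypothetical.

```python
import random
from typing import List, Sequence

MODULUS = 2 ** 32  # all arithmetic is done modulo this value


def mask_updates(updates: Sequence[Sequence[int]], seed: int = 0) -> List[List[int]]:
    """Apply cancelling pairwise masks to integer-encoded client updates."""
    n_clients = len(updates)
    dim = len(updates[0])
    masked = [list(u) for u in updates]
    rng = random.Random(seed)  # placeholder for pairwise-agreed secrets
    for i in range(n_clients):
        for j in range(i + 1, n_clients):
            for d in range(dim):
                mask = rng.randrange(MODULUS)
                masked[i][d] = (masked[i][d] + mask) % MODULUS  # client i adds
                masked[j][d] = (masked[j][d] - mask) % MODULUS  # client j subtracts
    return masked


def aggregate(masked: Sequence[Sequence[int]]) -> List[int]:
    """Sum masked updates; the pairwise masks cancel out modulo MODULUS."""
    return [sum(column) % MODULUS for column in zip(*masked)]


clinic_updates = [[1, 2], [3, 4], [5, 6]]
masked = mask_updates(clinic_updates)
print(masked)             # individual updates are hidden behind masks
print(aggregate(masked))  # the sum matches the sum of the original updates
```

A production protocol would also need to handle clients that drop out mid-round, which this sketch does not attempt.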

Telecoms: A Dynamic Jazz Band

Telecoms is a jazz band where devices and base stations operate in a constantly changing environment. Data flows in from millions of devices, which demands flexibility and precise coordination. FL helps telecom operators improve service quality, predict congestion, and personalize tariffs while preserving user privacy.

Key Challenges:

- Vast Data Volumes: In telecommunications, data comes from millions of devices. Analyzing such massive amounts of information requires significant computing resources.

- High Network Requirements: Transferring model updates between devices and servers requires high bandwidth and minimal response times. Delays or interruptions can reduce training accuracy.

- Data Vulnerability: Data transferred between devices is vulnerable to attacks such as data manipulation or poisoning.

To ensure the "band" works smoothly, it is necessary to use approaches that take into account the specifics of telecommunications:

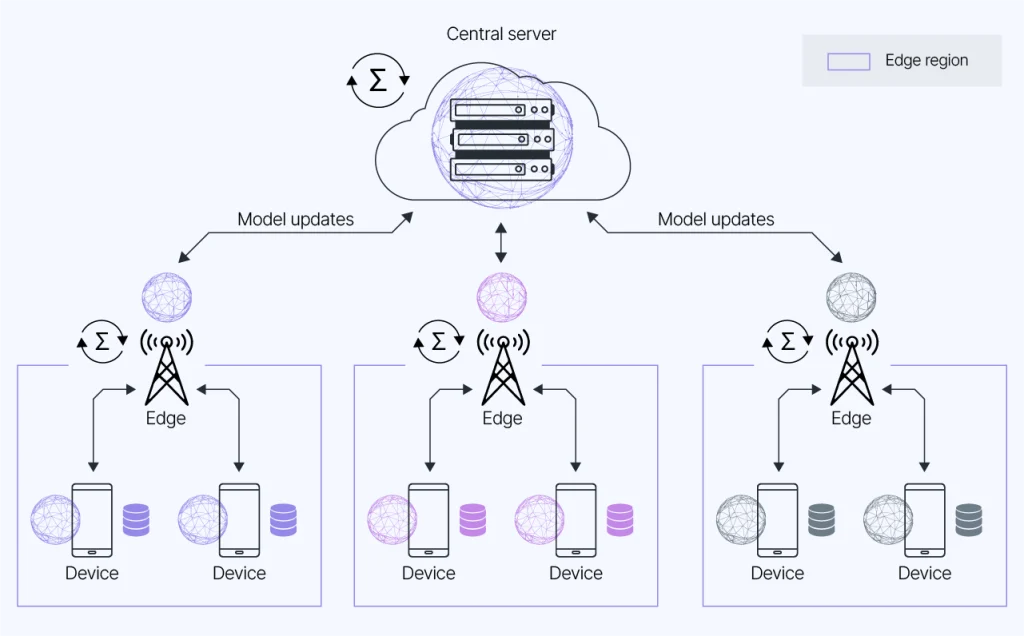

- Hierarchical Federated Learning (Hierarchical FL): This approach resembles the work of a jazz band with several levels of organization: first, musicians in one section synchronize with each other, and then combine into a single composition. In FL, model updates are aggregated at regional nodes, which send the aggregated results to the central server. This reduces the load on the network and speeds up processing.

- Fault-tolerant protocols: Redundant data copying and replication of updates prevent losses during network outages.

- Anomaly Detection: Methods such as Isolation Forest help identify suspicious updates, which helps prevent attacks and manipulation.

- Regular Updates: Regional servers undergo regular checks and updates, which keep the system up-to-date and secure.
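The two-level aggregation used in hierarchical FL can be sketched as follows. Each regional node averages the updates of its own devices and forwards a single vector plus its total sample count, and the central server then averages the regional results. The sketch assumes sample-count weighting at both levels, and the function names and figures are hypothetical.

```python
from typing import List, Sequence, Tuple


def weighted_average(vectors: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[float]:
    """Average vectors element-wise using the given non-negative weights."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(vectors[0])
    return [sum(v[i] * w for v, w in zip(vectors, weights)) / total
            for i in range(dim)]


def regional_aggregate(client_updates: Sequence[Sequence[float]],
                       client_sizes: Sequence[int]) -> Tuple[List[float], int]:
    """Regional node: combine local clients and report the total sample count."""
    return weighted_average(client_updates, client_sizes), sum(client_sizes)


def hierarchical_aggregate(
        regions: Sequence[Tuple[Sequence[Sequence[float]], Sequence[int]]]
) -> List[float]:
    """Central server: combine one summary per region instead of every client."""
    summaries = [regional_aggregate(updates, sizes) for updates, sizes in regions]
    return weighted_average([mean for mean, _ in summaries],
                            [count for _, count in summaries])


# Two hypothetical regions: one with two base stations, one with a single station.
regions = [
    ([[1.0], [3.0]], [1, 1]),
    ([[5.0]], [2]),
]
print(hierarchical_aggregate(regions))
```

Because each region reports its sample count alongside its average, the result equals what a flat weighted average over all clients would give, while the central server receives one message per region rather than one per device.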

New Chords: The Future of Federated Learning

Federated learning (FL) continues to evolve, adapting to increasingly complex heterogeneous device ecosystems. The future of this technology promises many innovations that will help overcome current limitations and unlock its full potential.

Adaptive learning algorithms: Developing algorithms that can dynamically adapt to the diverse computing resources and network conditions of different devices. This will allow for efficient task distribution across devices with different performance levels and ensure stable operation in unstable networks.

Enhanced data protection methods: Optimizing advanced technologies such as homomorphic encryption so that they remain practical for ensuring data security and privacy even on weak devices. Integrating blockchain with FL may also contribute to more reliable systems by managing trust relationships between devices and making learning processes more transparent.

Optimizing communication protocols: Developing and implementing data transfer protocols that take into account the specifics of heterogeneous networks, which will reduce latency and improve the efficiency of information exchange between devices.

Integration with Edge Computing: Offloading some computing tasks to devices located closer to data sources, reducing the load on central servers and ensuring faster data processing.

Standardization and Interoperability: Developing common standards and interfaces to ensure interoperability between different devices and platforms participating in federated learning.

Leveraging Artificial Intelligence to Manage Learning: Using AI to automatically manage the learning process, including task distribution, performance monitoring, and adaptation to changing conditions.

Federated learning becomes a conductor that turns disparate devices into a well-coordinated orchestra. Improved adaptation algorithms, new interaction standards, and integration with future technologies will ensure harmonious collaboration between devices, where even the most modest participants will be able to contribute to the overall “symphony” of data. This opens the way to creating smart, secure, and interconnected systems of the future.